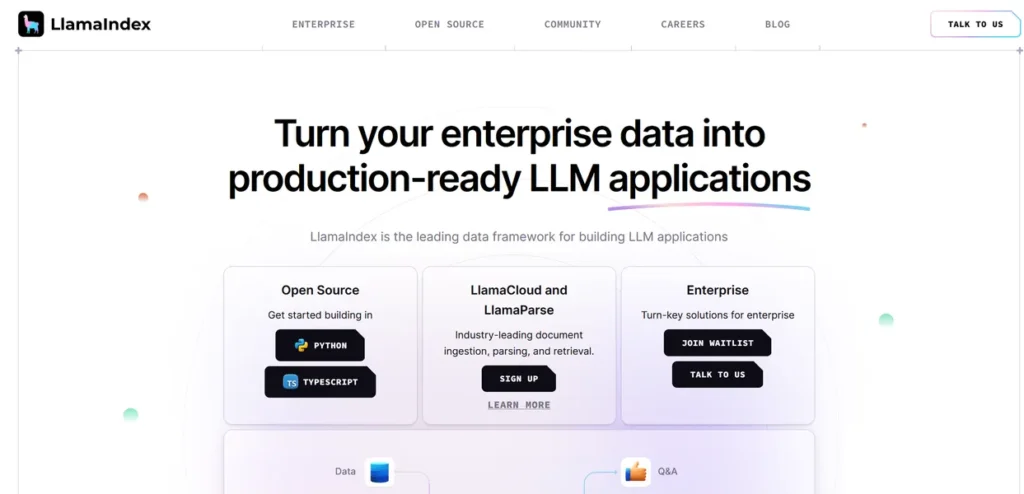

What is LlamaIndex?

From a developer’s standpoint, LlamaIndex is a data framework for connecting custom data sources to large language models (LLMs). Its primary function is to support context-aware, production-grade applications built on Retrieval-Augmented Generation (RAG). It provides a structured toolkit that covers the entire data pipeline, from ingesting and indexing diverse data formats to executing complex queries. This lets engineering teams build applications that reason over private or domain-specific information, bridging the gap between proprietary data and the generative capabilities of modern LLMs.

Key Features and How It Works

LlamaIndex operates as a systematic pipeline, providing developers with modular components to manage data for LLM applications. Its architecture is designed for flexibility and scalability, allowing for precise control over each stage of the RAG lifecycle.

- Data Connectors & Loading: The framework provides over 160 pre-built connectors that streamline data ingestion from a vast array of sources, including APIs, PDFs, SQL/NoSQL databases, and document stores like Confluence or SharePoint. This significantly reduces the boilerplate code required to load and parse disparate data types.

- Indexing and Storage: Once data is loaded, LlamaIndex structures it into an intermediate representation (Nodes) and builds indices for efficient retrieval. It integrates with more than 40 vector store providers, such as Pinecone and Weaviate, as well as with document and graph stores. This allows developers to select the storage and retrieval backend that best fits their application’s latency, cost, and scale requirements.

- Query Engine: The query engine is the core component for interacting with the indexed data. It translates natural language questions into retrieval operations, fetches the most relevant context from the data store, inserts that context into a prompt, and has the LLM synthesize a response from it. The framework also supports query transformations and routing for complex, multi-step questions.

- Observability and Evaluation: For production systems, LlamaIndex includes tools for evaluating the performance of the RAG pipeline. These cover retrieval relevance (hit rate and mean reciprocal rank, or MRR) as well as response quality. This focus on observability is critical for debugging, optimizing, and maintaining application performance over time.
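The loading, indexing, and querying stages above can be illustrated with a deliberately tiny, dependency-free sketch. It is not LlamaIndex code: `Node`, `chunk`, `embed`, `retrieve`, and `build_prompt` are hypothetical names, word counts stand in for a real embedding model, and the final LLM call is left out, so the sketch stops at the prompt a query engine would send. In LlamaIndex itself the same flow is usually a few calls such as `SimpleDirectoryReader("data").load_data()`, `VectorStoreIndex.from_documents(documents)`, and `index.as_query_engine().query(...)`; check the current documentation for exact signatures.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Node:
    """A chunk of a source document plus its origin (a toy stand-in for a node)."""
    text: str
    source: str


def chunk(text: str, source: str, max_words: int = 40) -> list[Node]:
    """Split a document into fixed-size word windows (a very simple node parser)."""
    words = text.split()
    return [
        Node(" ".join(words[i:i + max_words]), source)
        for i in range(0, len(words), max_words)
    ]


def embed(text: str) -> Counter:
    """Bag-of-words term counts: a placeholder for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, index: list[tuple[Node, Counter]], top_k: int = 2) -> list[Node]:
    """Rank every stored node by similarity to the query and keep the top_k."""
    q = embed(query)
    ranked = sorted(index, key=lambda pair: cosine(q, pair[1]), reverse=True)
    return [node for node, _ in ranked[:top_k]]


def build_prompt(query: str, nodes: list[Node]) -> str:
    """Insert retrieved context into the prompt an LLM would receive."""
    context = "\n".join(f"[{n.source}] {n.text}" for n in nodes)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Loading: in a real system these strings would come from data connectors.
documents = {
    "hr_policy.txt": "Employees accrue 20 vacation days per year. Unused days roll over once.",
    "it_faq.txt": "Reset your VPN password from the self-service portal. Tokens expire after 90 days.",
}

# Indexing: chunk each document into nodes and store each node with its vector.
index = [(node, embed(node.text)) for src, text in documents.items() for node in chunk(text, src)]

# Querying: retrieve the best-matching node and assemble the prompt.
question = "How many vacation days do employees get?"
nodes = retrieve(question, index, top_k=1)
prompt = build_prompt(question, nodes)
print(prompt)
```

Replacing `embed` with a real embedding model and the list with a vector store changes retrieval quality, not the shape of the pipeline.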
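Hit rate and MRR are straightforward to compute once each test query is paired with the document that should be retrieved. The sketch below assumes exactly one relevant document per query and ranked lists of document IDs, and the sample data is made up for illustration. LlamaIndex ships its own retrieval evaluators (for example `RetrieverEvaluator`), so this only shows the arithmetic behind the two metrics.

```python
def hit_rate(results: list[list[str]], expected: list[str], k: int) -> float:
    """Fraction of queries whose expected document appears in the top-k results."""
    hits = sum(1 for ranked, gold in zip(results, expected) if gold in ranked[:k])
    return hits / len(expected)


def mrr(results: list[list[str]], expected: list[str], k: int) -> float:
    """Mean reciprocal rank: average of 1/rank of the expected document within
    the top-k results, counting 0 for queries where it does not appear."""
    total = 0.0
    for ranked, gold in zip(results, expected):
        top = ranked[:k]
        if gold in top:
            total += 1.0 / (top.index(gold) + 1)  # ranks are 1-based
    return total / len(expected)


# Ranked document IDs a retriever returned for three test queries (illustrative data).
retrieved = [["d1", "d2", "d3"], ["d4", "d1", "d5"], ["d6", "d7", "d8"]]
expected = ["d1", "d1", "d9"]

print(hit_rate(retrieved, expected, k=3))  # 2 of 3 queries hit
print(mrr(retrieved, expected, k=3))       # (1/1 + 1/2 + 0) / 3 = 0.5
```

Hit rate only asks whether the right document was retrieved at all; MRR also rewards ranking it near the top, which matters when only the first few nodes fit into the prompt.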

Pros and Cons

Pros:

- Modularity and Extensibility: Its component-based architecture allows developers to swap out key parts—like the LLM, embedding models, or vector store—with minimal code changes, preventing vendor lock-in and enabling high degrees of customization.

- Deep Integration Ecosystem: The extensive library of connectors for data sources and storage backends ensures that LlamaIndex can be integrated into nearly any existing enterprise tech stack, saving significant development time.

- Production-Ready Focus: Features like response evaluation and observability are not afterthoughts; they are core to the framework, providing the necessary tools to build, monitor, and maintain reliable, high-quality LLM applications at scale.

- Active Open-Source Community: A vibrant community contributes to rapid development, robust documentation, and a valuable knowledge base for troubleshooting complex implementations.
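The modularity point comes down to coding against interfaces rather than concrete providers. A minimal sketch of that idea, using Python's `typing.Protocol`: the pipeline depends only on the hypothetical `EmbeddingModel` and `VectorStore` protocols, so either toy implementation can be swapped in without touching `Pipeline`. This illustrates the design principle; it is not LlamaIndex's actual class hierarchy.

```python
from typing import Protocol


class EmbeddingModel(Protocol):
    def embed(self, text: str) -> list[float]: ...


class VectorStore(Protocol):
    def add(self, key: str, vector: list[float]) -> None: ...
    def nearest(self, vector: list[float]) -> str: ...


class CharCountEmbedding:
    """Toy embedding: letter frequencies for a-z."""
    def embed(self, text: str) -> list[float]:
        text = text.lower()
        return [float(text.count(chr(c))) for c in range(ord("a"), ord("z") + 1)]


class LengthEmbedding:
    """A different toy embedding with a different dimensionality."""
    def embed(self, text: str) -> list[float]:
        return [float(len(text)), float(len(text.split()))]


class InMemoryStore:
    """Toy vector store that scans every row on each lookup."""
    def __init__(self) -> None:
        self._rows: dict[str, list[float]] = {}

    def add(self, key: str, vector: list[float]) -> None:
        self._rows[key] = vector

    def nearest(self, vector: list[float]) -> str:
        # Smallest squared Euclidean distance wins.
        return min(
            self._rows,
            key=lambda k: sum((a - b) ** 2 for a, b in zip(self._rows[k], vector)),
        )


class Pipeline:
    """Depends only on the protocols, so either component can be replaced."""
    def __init__(self, embedder: EmbeddingModel, store: VectorStore) -> None:
        self.embedder = embedder
        self.store = store

    def ingest(self, docs: dict[str, str]) -> None:
        for key, text in docs.items():
            self.store.add(key, self.embedder.embed(text))

    def query(self, text: str) -> str:
        return self.store.nearest(self.embedder.embed(text))


docs = {"short": "hi", "long": "a much longer document with many words in it"}
results = {}
for embedder in (CharCountEmbedding(), LengthEmbedding()):
    pipeline = Pipeline(embedder, InMemoryStore())
    pipeline.ingest(docs)
    results[type(embedder).__name__] = pipeline.query("hello")
print(results)
```

Swapping the embedding model changes only the constructor argument; the ingestion and query code stay identical.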

Cons:

- Steep Learning Curve: As a comprehensive framework rather than a simple library, mastering its abstractions—such as nodes, index structures, and query engines—requires a dedicated investment of time.

- Potential for Abstraction Leaks: For highly specialized or performance-critical use cases, developers may find themselves needing to delve into the framework’s source code to achieve the desired behavior, bypassing some of its high-level APIs.

- Resource Overhead: Building and maintaining indices for large-scale datasets is computationally intensive and can lead to substantial cloud infrastructure and storage costs, which must be factored into project planning.
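A back-of-the-envelope estimate makes the storage side of this cost concrete. The sketch assumes float32 vectors (4 bytes per value), 1,536 dimensions (the output size of some widely used embedding models), non-overlapping 512-token chunks, and a hypothetical 500-million-token corpus. It counts raw vectors only, so index structures, metadata, stored text, and replicas all come on top.

```python
import math


def estimate_chunks(total_tokens: int, chunk_size: int, overlap: int = 0) -> int:
    """Number of fixed-size chunks needed to cover a corpus with the given overlap."""
    stride = chunk_size - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than chunk_size")
    return max(1, math.ceil((total_tokens - overlap) / stride))


def embedding_storage_bytes(num_chunks: int, dims: int, bytes_per_value: int = 4) -> int:
    """Raw size of dense vectors only: no index structures, metadata, text, or replicas."""
    return num_chunks * dims * bytes_per_value


# Hypothetical corpus of 500 million tokens, split into 512-token chunks without overlap.
chunks = estimate_chunks(500_000_000, chunk_size=512)
raw = embedding_storage_bytes(chunks, dims=1536)
print(f"{chunks:,} chunks -> {raw / 1e9:.1f} GB of raw float32 vectors")
# 976,563 chunks -> 6.0 GB of raw float32 vectors
```

Every chunk also needs one embedding computation at ingestion time, and again whenever the corpus is re-indexed with a different model or chunking strategy.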

Who Should Consider LlamaIndex?

LlamaIndex is best suited for technical teams building sophisticated LLM-powered applications. Software and machine learning engineers will find it useful for creating RAG systems, internal knowledge base search tools, and complex AI agents. Data science teams can use it to rapidly prototype and deploy LLM-driven analytics tools. For enterprises, the framework offers a structured way to connect LLMs to complex, proprietary data ecosystems. Startups can also benefit by accelerating the development of core AI features without building the entire data pipeline from the ground up.

Pricing and Plans

LlamaIndex operates on a freemium model, offering a powerful open-source framework alongside managed cloud services for teams that require additional support and scalability.

- Free Tier: The core LlamaIndex framework is open-source and completely free. This plan is ideal for individual developers, researchers, and teams getting started with building LLM applications, providing full access to the library’s features with community support.

- Pro Plan ($9/month): Aimed at professional developers and small teams, this tier typically corresponds to managed services built on top of the framework. It offers enhanced features, higher usage limits, and dedicated support for building and deploying applications at a larger scale.

For the most current and detailed pricing information, please consult the official LlamaIndex website.

What makes LlamaIndex great?

How do you effectively bridge the gap between your proprietary, unstructured data and the abstract reasoning power of a large language model? LlamaIndex excels by providing a structured, modular framework engineered to solve this exact problem. Its primary strength lies in its unopinionated and highly extensible architecture. Unlike more rigid solutions, it does not lock developers into a specific LLM, vector database, or data format. This architectural freedom is paramount for long-term project viability and adapting to the rapidly evolving AI landscape. Furthermore, its focus on the full RAG lifecycle—from ingestion and indexing to retrieval and evaluation—provides an end-to-end toolchain that is essential for moving from a simple prototype to a resilient, production-grade application.

Frequently Asked Questions

- How does LlamaIndex differ from LangChain?

- LlamaIndex is specialized in the data pipeline for RAG systems, offering deep, granular control over data ingestion, indexing, and retrieval. LangChain is a broader framework for building general-purpose LLM applications by chaining calls and managing agents. While they have overlapping features, LlamaIndex provides more depth for data-intensive tasks, and the two can be integrated to leverage the strengths of both.

- Can LlamaIndex be used for real-time applications?

- Yes, LlamaIndex can power real-time applications, but performance depends on the chosen index structure, the data volume, and the latency of the underlying vector database. Achieving real-time performance requires carefully optimizing the retrieval pipeline and selecting a high-throughput vector store, both of which the framework is designed to support.

- Is LlamaIndex only for Python developers?

- While the most mature and feature-complete version is written in Python, a parallel TypeScript/JavaScript library (LlamaIndex.TS) is actively developed. This makes the framework’s core capabilities accessible to full-stack and front-end developers building applications in the Node.js or browser environment.

- What level of technical expertise is required to use LlamaIndex?

- Implementing a basic RAG application requires intermediate Python proficiency. However, to fully exploit its advanced features, such as customizing data loaders, building complex query engines, or optimizing a pipeline for production, a strong software engineering background and a solid understanding of data structures are highly recommended.