## Run:ai – Bridging the Gap Between ML Teams and AI Infrastructure

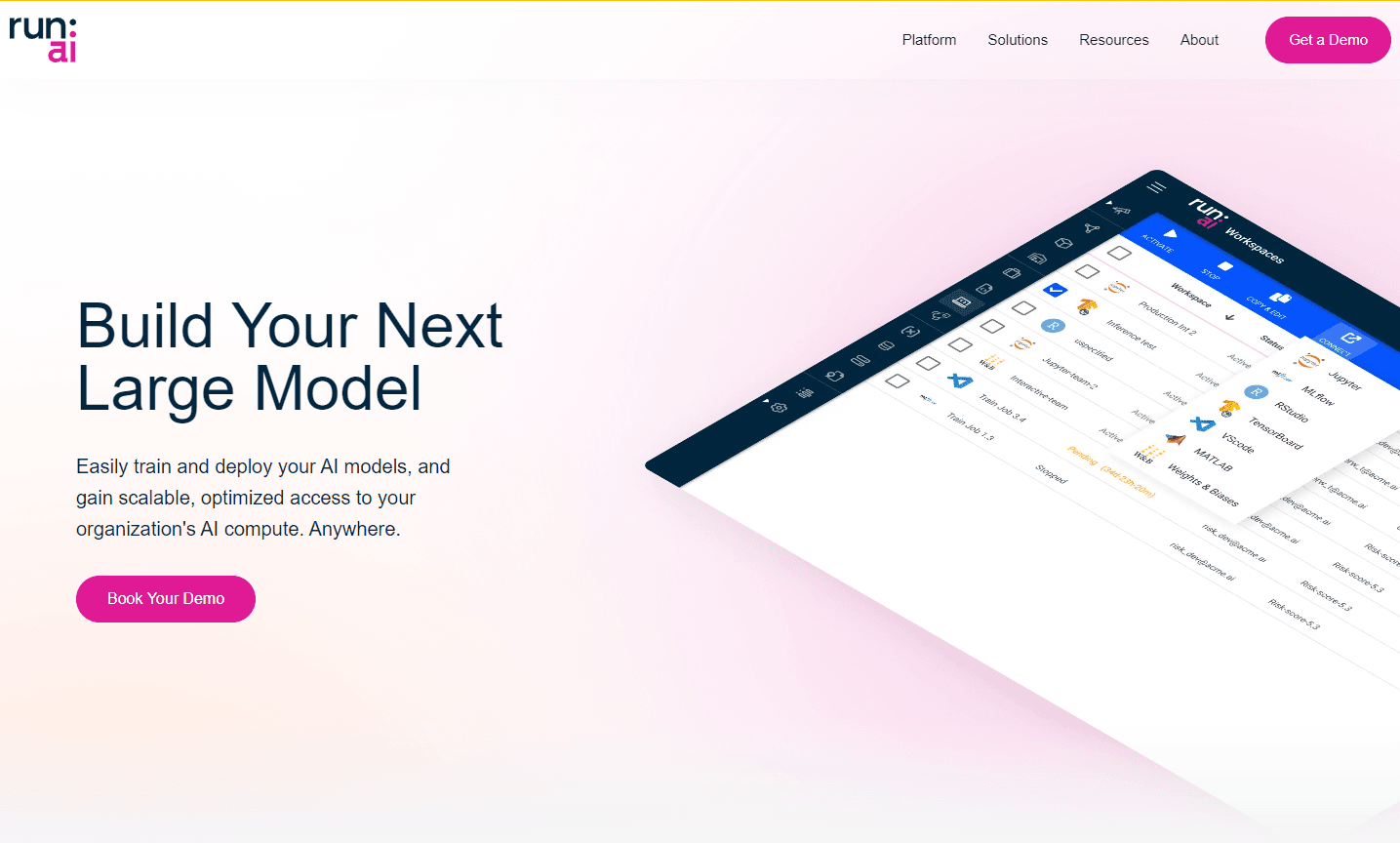

Run:ai offers a unified platform that abstracts infrastructure complexity and simplifies access to AI compute. The platform is designed to train and deploy models across cloud and on-premises environments. It integrates with popular tools and frameworks and applies its own scheduling and GPU optimization technologies across the ML workflow, from building and training models to deploying them.

### Key Features:

– Unified AI Lifecycle Platform: Seamlessly integrates with preferred tools and frameworks, providing comprehensive support throughout the AI lifecycle.

– Data Preprocessing Scalability: Scale data processing pipelines across multiple machines with built-in integrations for frameworks such as Spark, Ray, Dask, and RAPIDS.

– GPU Optimization Technologies: Maximize GPU infrastructure utilization through GPU fractioning, oversubscription, and bin-packing scheduling.

– Dynamic Resource Management: Features like dynamic quotas, automatic GPU provisioning, and fair-share scheduling ensure optimal resource allocation.

– AI Cluster Enhancement: Monitor and control infrastructure across different environments, backed by security features such as policy enforcement and access control.
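
To make the GPU fractioning and bin-packing ideas above concrete, here is a minimal sketch in Python. It assumes a simple first-fit-decreasing heuristic and treats each GPU as a bin of capacity 1.0; the `Gpu` class, the `pack_jobs` function, and the job names are hypothetical illustrations, not Run:ai's scheduler or API.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    """One physical GPU, modeled as a bin with capacity 1.0 (illustrative only)."""
    free: float = 1.0
    jobs: list[str] = field(default_factory=list)


def pack_jobs(requests: dict[str, float]) -> list[Gpu]:
    """Place fractional GPU requests with first-fit-decreasing bin packing.

    `requests` maps a job name to the fraction of one GPU it asks for.
    This is a generic heuristic chosen for illustration, not a vendor algorithm.
    """
    gpus: list[Gpu] = []
    # Placing the largest requests first tends to leave fewer unusable gaps.
    ordered = sorted(requests.items(), key=lambda item: item[1], reverse=True)
    for name, fraction in ordered:
        if not 0 < fraction <= 1:
            raise ValueError(f"{name}: fraction must be in (0, 1], got {fraction}")
        for gpu in gpus:
            # Small tolerance guards against floating-point rounding.
            if gpu.free + 1e-9 >= fraction:
                break
        else:
            # No existing GPU has room, so bring another one into use.
            gpu = Gpu()
            gpus.append(gpu)
        gpu.jobs.append(name)
        gpu.free -= fraction
    return gpus


if __name__ == "__main__":
    demo = {
        "notebook-a": 0.25,
        "notebook-b": 0.25,
        "train-x": 0.5,
        "infer-y": 0.5,
        "train-z": 0.75,
    }
    for index, gpu in enumerate(pack_jobs(demo)):
        print(f"GPU {index}: jobs={gpu.jobs} free={gpu.free:.2f}")
```

In this toy example, five jobs requesting 2.25 GPUs in total fit on three devices, whereas whole-GPU allocation would need five. A production scheduler also has to account for GPU memory, isolation between jobs, and preemption, which this sketch ignores.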

### Ideal Use Case:

Enterprises and organizations with substantial AI and ML development efforts, particularly those that need to manage and optimize GPU resources efficiently.

### Why use Run:ai:

– Efficient AI Development: Quickly provision preconfigured workspaces and scale ML workloads with ease.

– Optimized GPU Utilization: GPU fractioning, oversubscription, and bin-packing scheduling raise GPU utilization and keep job scheduling efficient.

– Broad Integration Capabilities: Compatible with a range of AI tools, frameworks, and NVIDIA AI Enterprise software.

– Robust Security Measures: Policy enforcement and access control support data protection, compliance, and the safeguarding of organizational assets.
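
The fair-share scheduling mentioned under Key Features can be illustrated with a small sketch. It assumes the classic max-min fairness rule: split the pool evenly, cap each team at what it actually asked for, and redistribute whatever is left. The `fair_share` function and the team names are hypothetical, and the rule is a textbook stand-in rather than Run:ai's documented behavior.

```python
def fair_share(total_gpus: float, demands: dict[str, float]) -> dict[str, float]:
    """Max-min fair split of `total_gpus` across teams (illustrative only).

    No team receives more than it demands; capacity a team does not need
    is re-divided equally among the teams that still want more.
    """
    allocation = {name: 0.0 for name in demands}
    unsatisfied = {name for name, demand in demands.items() if demand > 0}
    remaining = float(total_gpus)

    while unsatisfied and remaining > 1e-9:
        # Offer every still-hungry team an equal slice of what is left.
        share = remaining / len(unsatisfied)
        for name in sorted(unsatisfied):
            grant = min(share, demands[name] - allocation[name])
            allocation[name] += grant
            remaining -= grant
        # Teams whose demand is now met drop out of the next round.
        unsatisfied = {
            name for name in unsatisfied
            if demands[name] - allocation[name] > 1e-9
        }
    return allocation


if __name__ == "__main__":
    shares = fair_share(8, {"research": 2, "nlp": 4, "vision": 10})
    for team, gpus in shares.items():
        print(f"{team}: {gpus:.2f} GPUs")
```

With 8 GPUs and demands of 2, 4, and 10, the small team is fully served and the other two split the remainder evenly. Real schedulers typically layer weights, guaranteed quotas, and preemption on top of a rule like this.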

### tl;dr:

Run:ai provides a platform that simplifies the AI lifecycle. Its GPU optimization and resource management features aim to make AI development more efficient and to maximize returns on AI investments.